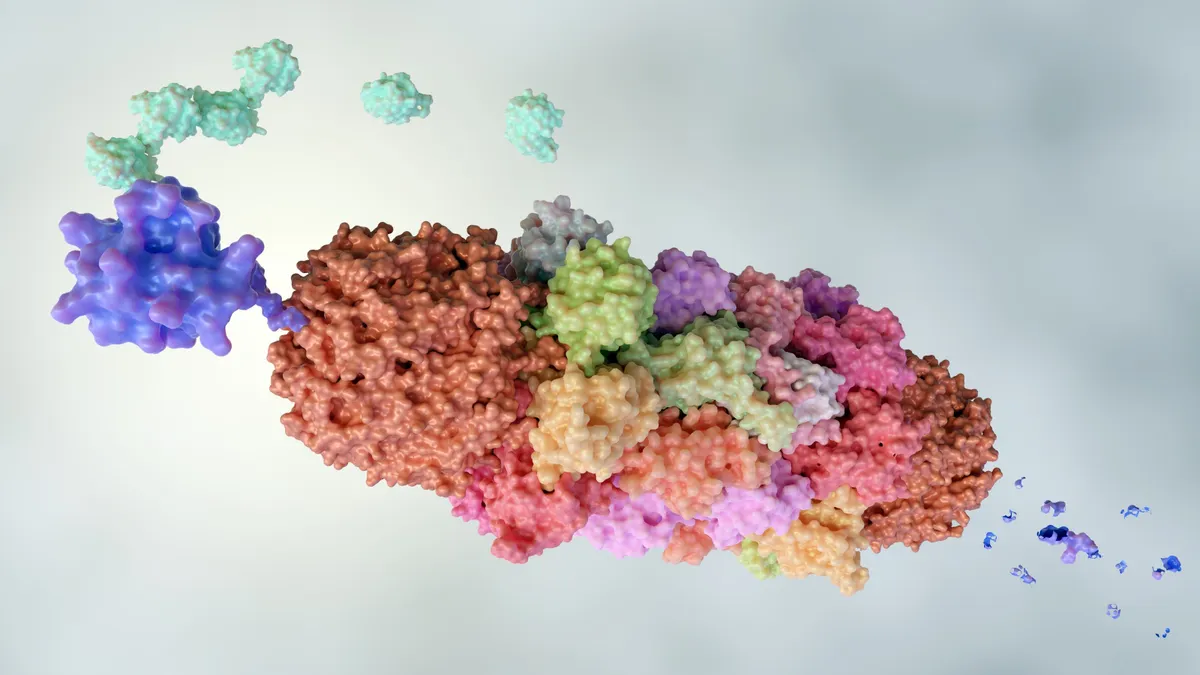

Central nervous system (CNS) and rare-disease trials face a growing precision challenge: even small measurement errors can have major implications.

Using inadequately validated clinical outcome assessments (COAs) can mean the difference between detecting a treatment effect and missing it entirely.

Clinical researchers recognize this pressure. In a new research report, “Unlocking the Power of COAs in Clinical Research,” 94% of respondents said they desired a greater understanding of endpoint and COA selection.

To explore the nuances of COA selection in rare-disease trials, BioPharma Dive spoke with Lynsey Psimas, Ph.D., director of business development at Pearson Research and a licensed clinical psychologist.

As Psimas explained, bridging meaningful change and measurable progress means “translating what matters to patients into what can be measured reliably. It’s aligning psychometric rigor with lived experience, capturing change that is both statistically defensible and personally significant.”

Why subtle changes and COA sensitivity matter in rare disease and CNS

Findings from the COA study show that for more than half of clinical researchers (54%), “sensitivity to meaningful clinical change” is now the top factor in COA selection.

But achieving that level of sensitivity is especially challenging in CNS and rare-disease research. Several factors contribute to the complexity:

- Heterogeneous populations: Patient profiles can vary widely, sample sizes are small, and symptoms often fluctuate unpredictably.

- Participation barriers: Communication or motor limitations can restrict how patients engage with standard assessments, while floor and ceiling effects may obscure true progress.

- Study variability: Long study durations and changing raters can introduce additional inconsistencies, making both sensitivity and standardization essential.

A confidence gap compounds the challenge. According to the Pearson report, 82% of researchers said they felt confident they could access properly validated COAs, which means nearly one in five did not.

In these high-stakes therapeutic areas, even modest shifts matter. For example, a patient initiating more social interaction or a child managing daily routines independently may reflect meaningful progress, yet those changes often go unseen in raw scores.

“Gains in processing speed or executive function, as measured by tools like RBANS or D-KEFS, may transform daily functioning even if total scores change minimally,” Psimas said. “And adaptive improvements on the Vineland-3 often reflect the earliest signs of real-world progress.”

Ultimately, COA design, selection, and implementation determine whether a study can detect meaningful clinical change. Every aspect, from licensing to training, must be optimized for rare and complex populations.

Where standard measurement often falls short

Part of the sensitivity challenge stems from the limits of traditional measurement tools, many of which weren’t built with these populations in mind.

Standardized endpoints and generic COAs have long been benchmarks in clinical research, but in CNS and rare disease, they often fail to capture what truly matters to patients.

“In CNS and rare-disease studies, you often see tools borrowed from other indications that don’t reflect the lived experience of these patients,” Psimas explained. “They may lack content validity, include items that are too easy or too difficult, or rely on recall periods and administration formats that don’t suit the population. The result can be underdetection of change that is meaningful to patients and families.”

The broader data echoes that reality. In the recent Pearson study, 64% of researchers cited translation quality as a challenge, 52% reported tracking issues in rater training, and 63% said they needed clearer regulatory guidance.

Each reflects a different dimension of the same problem. When tools aren’t fully aligned with their target populations or consistently applied across languages and raters, sensitivity suffers, and subtle but meaningful changes can go unnoticed.

How to fine-tune COAs for rare and complex populations

Addressing sensitivity and confidence challenges requires designing and implementing COAs that truly reflect the populations they’re built to measure.

As Psimas explained, improving precision starts with aligning every aspect of a COA, ranging from structure to training, with the realities of the patient population. “It’s about designing tools that are psychometrically sound but also flexible enough to capture what’s meaningful in everyday life,” she said.

When evaluating and refining a COA for rare and complex populations, researchers can focus on five key attributes:

- Valid and reliable endpoints

- Sensitivity to small but meaningful change

- Minimal floor/ceiling effects

- Flexible administration

- Cultural and linguistic adaptability
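
For teams that want a quick quantitative check on two of these attributes, the sketch below shows one possible way to screen for floor/ceiling effects and to estimate sensitivity to change. It is an illustration only, not a method drawn from the Pearson report: the function names, the 0-10 scale, and the scores are all hypothetical, and the standardized response mean (mean change divided by the standard deviation of change) is just one of several responsiveness statistics a study might use.

```python
from statistics import mean, stdev


def floor_ceiling_rates(scores, min_score, max_score):
    """Return the share of scores sitting at the scale minimum and maximum."""
    n = len(scores)
    floor = sum(1 for s in scores if s == min_score) / n
    ceiling = sum(1 for s in scores if s == max_score) / n
    return floor, ceiling


def standardized_response_mean(baseline, follow_up):
    """Mean within-patient change divided by the SD of that change."""
    changes = [f - b for b, f in zip(baseline, follow_up)]
    return mean(changes) / stdev(changes)


# Made-up scores on a hypothetical 0-10 scale (not real trial data).
baseline = [0, 0, 1, 2, 0, 3, 1, 0]
follow_up = [1, 0, 2, 4, 0, 4, 3, 1]

floor, ceiling = floor_ceiling_rates(baseline, min_score=0, max_score=10)
srm = standardized_response_mean(baseline, follow_up)

print(f"At floor: {floor:.0%}, at ceiling: {ceiling:.0%}")
print(f"Standardized response mean: {srm:.2f}")
```

In this invented sample, half of the baseline scores sit at the bottom of the scale, the kind of pattern that could hide decline or early improvement in a real study. Any cutoff for what counts as an unacceptable floor or ceiling rate would need to come from the study's own psychometric plan.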

Early planning also matters. Many of the challenges researchers face, such as licensing delays, translation issues, and inconsistent rater training, stem from COA decisions made too late in the process.

Refining COAs for rare and complex populations is less about reinventing the framework and more about precision: ensuring every design choice, training protocol, and cultural adaptation brings researchers closer to capturing meaningful progress.

Bridging meaningful change and measurable progress

COA selection is complex work, balancing scientific rigor with what truly matters to patients. It’s no wonder that 60% of researchers in the COA study said expert consultation would make the process easier.

Pearson Research partners with teams to simplify that complexity. Through validated tools such as Vineland-3, RBANS, D-KEFS, and Bayley-4, Pearson helps researchers select, validate, and implement COAs that bridge meaningful change and measurable progress.

Read the full report, “Unlocking the Power of COAs in Clinical Research,” for deeper insights into today’s most common COA challenges and practical ways to address them.